The end of taxonomy

How AI is going to upend the foundations of legacy programmatic ad tech

Twenty years ago it was legit hard to build tech at internet scale: the first Right Media ad server ran on a custom-compiled Gentoo OS because we had to patch an alpha Linux 2.6 kernel to make it handle concurrent connections properly.

We made a lot of compromises to make things actually work given the limited memory, compute, and network resources we had. Our data science tools were rudimentary, and in fact nobody called it data science yet. We used GPUs to play video games!

One of the critical ways to make the incredibly complicated internet fit into our limited capabilities was to use taxonomies, that is to say, to categorize stuff. If you know that a web page is about Interior Decorating, presumably that tells you something about the person visiting the site - for instance, I promise you it is not me. Click around the IAB Content Taxonomy, used by the majority of the ad tech industry, and you'll find it both terrifyingly precise (infertility) and reductive (American football).

Enter AI

One thing that large language models are incredibly good at is processing and “understanding” content.

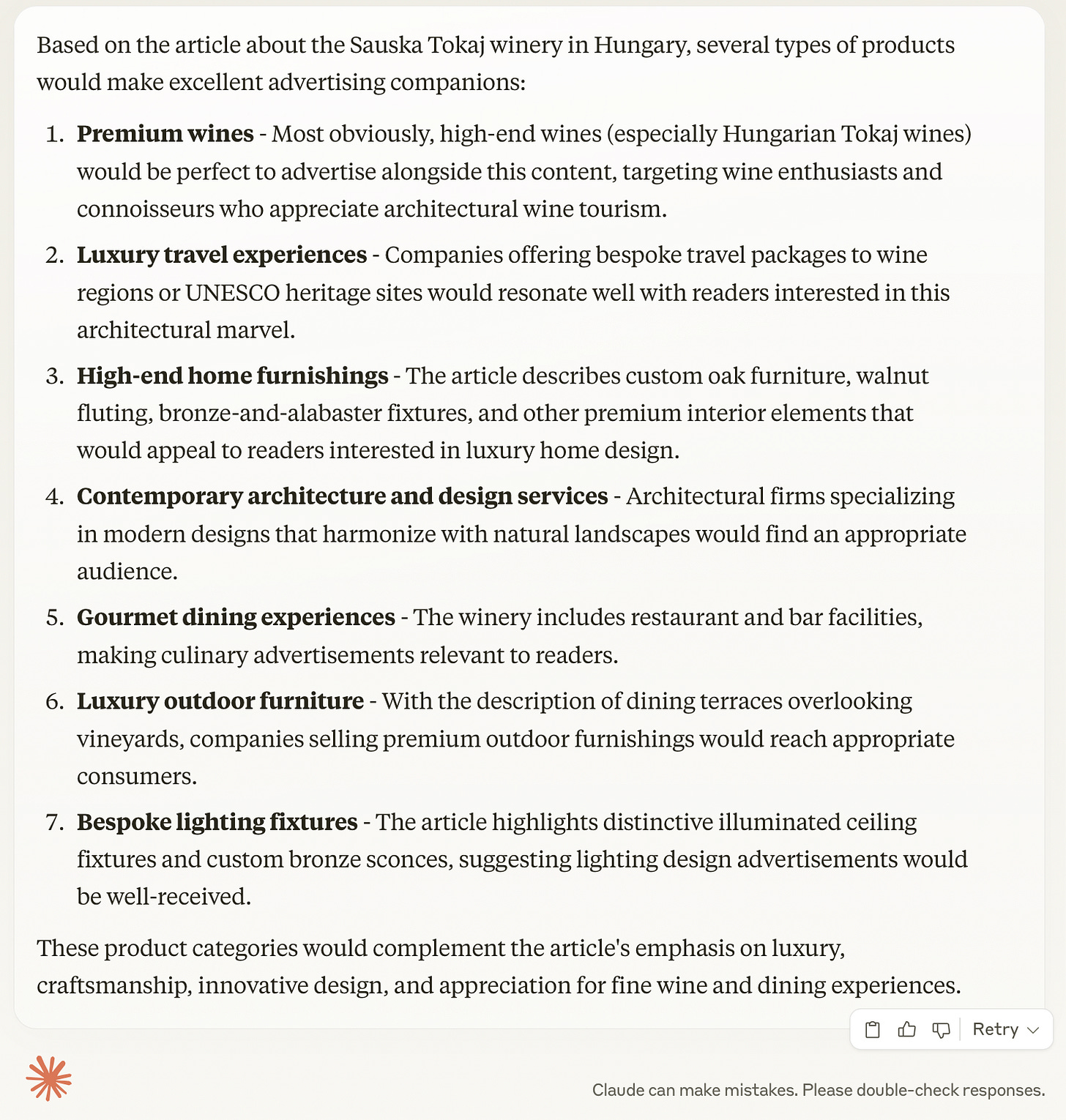

I found a random interior design article, pasted its text and images into Claude, and asked: “what kind of products do you think would make sense to advertise by this article?”

That is fantastic, right? I think a contextual targeting approach¹ would definitely have caught 3, 4, 6, and 7. But premium wines (of course that makes sense for a winery), fine dining, and luxury travel… great ideas.

Taking a different approach, what if we ask about particular advertisers?

Again, a brilliant response. Let’s go for a trifecta: “What ad copy would you suggest for a United Airlines ad on this page?”

This is SO much better than trying to stick a keyword in some kind of dynamic creative solution. These are fantastic.

But you can’t run AI on every page of the internet. That’s crazy talk.

In 2023, it would have cost around $45M to run an LLM on every ad-supported page of the internet. Eighteen months later, more like $1M. Today, with DeepSeek, it’s $270K. By this time next year, running an LLM on every page of the internet will cost less than $100K.

That’s not a whole lot of money if you’re an advertiser spending $1B a year on digital. It’s not a whole lot of money if you’re an advertiser spending $10M a year. It just means you need a few percent better performance to make it worth it. And if you look at the results above, it’s hard to imagine it’s not a lot better than most of the ads we see on the internet today.
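To make the break-even point concrete: if the agent costs C a year and you spend S on media, it pays for itself once performance improves by C / S. The sketch below plugs in the estimates above, under our own simplifying assumption that the value of a performance lift scales linearly with spend.

```python
# Break-even check for a Context Agent, using the cost estimates quoted above.
# Assumption (ours, not the article's): the value of better ads scales linearly
# with spend, so a lift of x on annual spend S is worth x * S.


def break_even_lift(agent_cost: float, annual_spend: float) -> float:
    """Fractional performance lift at which the agent pays for itself."""
    if annual_spend <= 0:
        raise ValueError("annual_spend must be positive")
    return agent_cost / annual_spend


# The article's estimates for running an LLM on every ad-supported page.
COST_2023 = 45_000_000
COST_DEEPSEEK = 270_000
COST_NEXT_YEAR = 100_000  # projected upper bound

print(f"2023 -> DeepSeek: {COST_2023 / COST_DEEPSEEK:.0f}x cheaper")

for spend in (1_000_000_000, 10_000_000):
    lift = break_even_lift(COST_NEXT_YEAR, spend)
    print(f"${spend:,} per year: break-even lift {lift:.2%}")
```

At $10M a year the break-even lift comes out to 1%, and at $1B it is 0.01%, comfortably within the “few percent” framing above. The sketch ignores how much of the web a single advertiser actually needs scored, which would change the real cost.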

What about through a sustainability lens? GPT-4 would have required 1,440 metric tons of CO2 for inference, whereas Llama 3.1 70B would require only 360 metric tons, and DeepSeek-V3 is likely much lower thanks to its mixture-of-experts architecture and FP8 precision. These numbers are falling less quickly than financial costs, likely because increased competition is pushing prices down faster than efficiency gains alone, and because attempts to improve model performance consume some of those gains. But they are still dropping impressively.

Introducing Context Agents

Every brand is going to have a Context Agent: a model that’s trained to figure out four things:

Is this content I want my ads next to? (brand suitability)

Is this content I’m excited to advertise next to? (brand alignment)

What key messages and products do I want to talk about on this page/app/show/channel?

What assets do I have (e.g. images, videos, product catalogs) that would be useful to create the optimal experience for this context?

The interface to a Context Agent is quite simple, thanks to the ability of LLMs to handle complex input. An “artifact” representing a piece of content is presented in JSON format, and the agent is asked to return JSON in a simple, predefined format.

For instance:

Please assess whether this content is appropriate for United Airlines and whether it indicates propensity to purchase United Airlines products. If so, please suggest some key messages to use and products to recommend. Please respond with JSON in the format {"suitable": [true|false], "propensity": [low = 1, high = 10], "messages": ["message1", "message2"], "products": ["product1", "product2"], "reasoning": "an explanation"}.

Here's more detail about how to do this:

[CUSTOM PROMPT ABOUT THE BRAND]

Content:

{
  "title": "UFO or Winery? This Contemporary Structure Stuns In Hungary",
  "paragraphs": [
    "How do you create an attention-grabbing modern building that integrates seamlessly with its timeless rural setting as though it has always belonged there? This conundrum faced Bord Architectural Studio and Tihany Design when they collaborated on Sauska Tokaj, a new hilltop winery and restaurant complex near Rátka, in Hungary’s UNESCO World Heritage-listed Tokaj-Hegyalja wine region. The resulting structure—a pair of limestone-clad saucers—is both strange and familiar, like a UFO that’s just touched down or is emerging, chrysalislike, from the earth. It’s almost as if the Hungarian countryside itself is giving birth to the next wave in contemporary architecture.",
    ...
  ],
  "images": ["A house with a curved roof and a curved driveway", ...]
}

The agent would return something like:

{
  "suitable": true,
  "propensity": 7,
  "products": [
    "United flights to Budapest (gateway to Hungary's wine regions)",
    "United Polaris business class for premium travel experience",
    "United Vacations packages to Central Europe",
    "United MileagePlus Dining program tie-ins with wine experiences"
  ],
  "messages": [see above],
  "reasoning": "The content discusses a high-end architectural destination in Hungary's prestigious wine region (UNESCO World Heritage site), suggesting an audience interested in luxury travel, culture, architecture, and fine dining/wine experiences. This aligns well with United Airlines' international service and premium offerings. The content appeals to affluent travelers who appreciate unique cultural experiences abroad - a demographic likely to book international flights and premium cabin services. The wine tourism angle also presents opportunities for vacation packages. The content itself is appropriate and non-controversial, focusing on architecture, design, and cultural appreciation."
}

And this is just an off-the-shelf AI running a zero-shot prompt with no fine-tuning. Imagine what it could do if it were built around exactly what United is trying to accomplish with its marketing.
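For readers who want to try this, here is a minimal Python sketch of the two ends of that interface: rendering the prompt around a content artifact, and validating the reply against the format the prompt asks for. The helper names (`build_prompt`, `parse_verdict`, `ContextVerdict`) are hypothetical, and no particular LLM client or API is assumed; send the rendered string with whatever you use.

```python
import json
from dataclasses import dataclass

# Hypothetical helpers for the Context Agent interface: a JSON "artifact" goes
# in with the prompt, and a JSON verdict comes back. No LLM client is assumed.

PROMPT_TEMPLATE = (
    "Please assess whether this content is appropriate for {brand} and whether it "
    "indicates propensity to purchase {brand} products. If so, please suggest some "
    "key messages to use and products to recommend. Please respond with JSON in the "
    'format {{"suitable": [true|false], "propensity": [low = 1, high = 10], '
    '"messages": ["message1", "message2"], "products": ["product1", "product2"], '
    '"reasoning": "an explanation"}}.\n\n'
    "Here's more detail about how to do this:\n\n{brand_guidance}\n\n"
    "Content:\n\n{artifact}"
)


@dataclass
class ContextVerdict:
    suitable: bool
    propensity: int
    messages: list[str]
    products: list[str]
    reasoning: str


def build_prompt(brand: str, brand_guidance: str, artifact: dict) -> str:
    """Render the zero-shot prompt, serializing the content artifact as JSON."""
    return PROMPT_TEMPLATE.format(
        brand=brand,
        brand_guidance=brand_guidance,
        artifact=json.dumps(artifact, indent=2, ensure_ascii=False),
    )


def parse_verdict(raw: str) -> ContextVerdict:
    """Parse the agent's reply and reject anything outside the requested format."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if not isinstance(data.get("suitable"), bool):
        raise ValueError("'suitable' must be true or false")
    propensity = data.get("propensity")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(propensity, int) or isinstance(propensity, bool):
        raise ValueError("'propensity' must be an integer")
    if not 1 <= propensity <= 10:
        raise ValueError("'propensity' must be between 1 and 10")
    lists = {}
    for key in ("messages", "products"):
        value = data.get(key, [])
        if not isinstance(value, list) or not all(isinstance(v, str) for v in value):
            raise ValueError(f"'{key}' must be a list of strings")
        lists[key] = value
    return ContextVerdict(
        suitable=data["suitable"],
        propensity=propensity,
        messages=lists["messages"],
        products=lists["products"],
        reasoning=str(data.get("reasoning", "")),
    )
```

Validating the reply matters because the model's output is free text underneath. Anything that drifts from the requested format raises a `ValueError` instead of flowing silently into buying decisions.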

How do I build a Context Agent?

Option 1: build a crawler that can scrape all the public content on the internet; train/prompt an LLM and run it on all of that content, making sure to calibrate it to exclude all of the unsafe and unsuitable content; push the resulting data into all of the ad tech platforms; and figure out how to include it in bidding and buying logic using all of the different custom algorithms and other tools available on each platform.

Option 2: skip all of that except the most important part: train/prompt an LLM.

At Scope3, we started with option 1. We built a crawler in 2022 for our initial sustainability model. We built real-time integrations into SSPs and DSPs to plug in our sustainability signals. As we added more data, we added the ability to handle more complex decisioning logic.

When we acquired Adloox last fall, we decided to build a new product: Scope3 Brand Standards. The big idea was to create a bespoke brand suitability model for every brand: in effect, a Context Agent. Since we had much of the infrastructure already built to crawl and decision and integrate, we really just needed to figure out how to effectively prompt an LLM the right way. (To any Scope3 engineers reading this and either laughing hysterically or weeping into their keyboard - yes, I know it was way more complicated than this!)

Introducing the Scope3 Agentic Platform

Once we got the platform working for ourselves, why not build something that our clients and partners could use to build their own agents?

The platform consists of four main systems:

A content pipeline, which ingests content and sends it to Context Agents, then caches the results for real-time use

An audience pipeline, which ingests audiences from Audience Agents and caches them for real-time use

A custom algorithms pipeline, which ingests dimensions and weights from Optimization Agents for use in real-time decisioning

A real-time decisioning engine that connects these components to the ad tech ecosystem, plugging into DSPs, SSPs, and ad servers. The combination of algorithms, data, and integrations is a Media Agent.
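One way to picture how these pieces fit together is a toy model: Context Agents run ahead of time, the content pipeline caches their verdicts, and the decisioning engine does only a cache lookup plus a weighted score at bid time, using weights of the kind an Optimization Agent would supply. Every name here is hypothetical, and the 0-to-1 signal scale and the skip-if-uncached policy are our assumptions, not a description of Scope3's implementation.

```python
from dataclasses import dataclass, field
from typing import Optional

# Toy model of the platform's data flow; all names are hypothetical.
# Context Agent results are cached ahead of time, so the real-time path is
# only a lookup plus a weighted score over Optimization Agent dimensions.


@dataclass
class ContextResult:
    suitable: bool
    signals: dict[str, float]  # e.g. {"propensity": 0.7}; 0..1 scaling is an assumption


@dataclass
class DecisioningEngine:
    weights: dict[str, float]  # dimension -> weight, from an Optimization Agent
    cache: dict[str, ContextResult] = field(default_factory=dict)

    def ingest(self, url: str, result: ContextResult) -> None:
        """Content pipeline: store a Context Agent's result for real-time use."""
        self.cache[url] = result

    def score(self, url: str) -> Optional[float]:
        """Real-time path: None means "don't bid", otherwise a weighted score."""
        result = self.cache.get(url)
        if result is None or not result.suitable:
            # Uncached or unsuitable content is skipped (a conservative choice).
            return None
        return sum(
            weight * result.signals.get(dim, 0.0)
            for dim, weight in self.weights.items()
        )


engine = DecisioningEngine(weights={"propensity": 0.8, "alignment": 0.2})
engine.ingest(
    "https://example.com/winery",
    ContextResult(suitable=True, signals={"propensity": 0.7, "alignment": 0.9}),
)
```

Keeping the LLM out of the bid path is the reason to cache: model inference typically takes far longer than a real-time bid request allows, so the engine only ever reads precomputed results.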

Our goal is to enable every brand - along with its agency and ad tech partners - to build a bespoke Media Agent. If I may be so bold, we hope to enable the same kind of innovation that we created twenty years ago with the dawn of programmatic. Instead of the ad exchange, we have the agentic exchange: a platform to connect advertisers, agencies, publishers, networks, and ad tech companies at the cutting edge of technology to create unprecedented value.

A few of the big opportunities we see for the media agent ecosystem to unlock value:

Integrating audiences. Today we have data marketplaces with myriad providers and segments, often conflicting with each other. This is screaming for AI to map user characteristics to brands directly, without intermediating through segments.

Mining clean rooms. Similar to the above point, transactional data is messy and disjointed, especially across platforms (think mapping a credit card transaction to a retail transaction, or even across retailers). Shouldn’t AI be able to create a synthetic shopper?

Custom algorithms. This is currently a niche capability that requires data science and complex integrations with each DSP. An optimization agent running on an agentic platform will be able to push signals into all of the places, including direct buys and native ads. The line between programmatic and direct is going to get very blurry very quickly.

Executing best practices. Most brands and agencies have centers of excellence that recommend best practices across their markets and teams, often informed by partners like Ebiquity. Using agents, these best practices can be checked on every media object across every platform, flagging opportunities to humans-in-the-loop, or even taking action directly.
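To make “checked on every media object” concrete, here is a hypothetical sketch in which each best practice is a rule: rules with a safe automatic fix are applied directly, and the rest are flagged for a human in the loop. The rule names, the object fields, and the frequency-cap default of 3 are placeholders for illustration, not actual best-practice guidance.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical sketch of best-practice checks run over every media object.
# A rule with a fix is applied automatically; a rule without one is flagged
# for a human in the loop. Both example rules are placeholders.


@dataclass
class Rule:
    name: str
    violated: Callable[[dict], bool]
    fix: Optional[Callable[[dict], None]] = None  # only for changes safe to automate


def check(objects: list[dict], rules: list[Rule]) -> tuple[list, list]:
    """Return (flagged, fixed) as lists of (object id, rule name) pairs."""
    flagged, fixed = [], []
    for obj in objects:
        for rule in rules:
            if not rule.violated(obj):
                continue
            if rule.fix is not None:
                rule.fix(obj)
                fixed.append((obj["id"], rule.name))
            else:
                flagged.append((obj["id"], rule.name))
    return flagged, fixed


RULES = [
    Rule(
        name="missing_frequency_cap",
        violated=lambda o: o.get("frequency_cap") is None,
        fix=lambda o: o.update(frequency_cap=3),  # placeholder default
    ),
    Rule(
        name="brand_standards_off",
        violated=lambda o: not o.get("brand_standards", False),
    ),
]
```

Splitting rules into “fix” and “flag” is one way to express the choice between acting directly and escalating to a person; which rules deserve automation is a judgment each brand or agency would make.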

Of course there are so many more areas where AI and agents can create value (and I’m explicitly not including orchestration, analytics, or other areas that are bigger than “just” media). I think we’ll see so many new ideas over the next few years, and I can’t wait to see this ecosystem emerge.

Back to my Gentoo Linux point - all of this is just barely possible today, and it requires a lot of money, carbon, and tuning to get right. But in a decade, or likely less, it’s going to be easy, cheap, sustainable, and everywhere.

Let’s get started!

¹ I showed this to Anne Coghlan and she asked: is this article actually *about* interior design? It’s in Interior Design magazine… but this is the exact problem with taxonomies!
